When was the last time you dialed an interested prospect for your product or service?

If it’s been a while, we totally get it. At times, we’ve all questioned whether calling is worth the effort. After all, 79% of unidentified calls go unanswered (HubSpot).

Last year, we decided to challenge the idea that calling is dead.

Over 90 days, we tested a simple but bold theory: outbound calling is not only alive—it’s thriving, even at a historically product-led growth company.

Here’s how we did it and why you should try it, too.

Building the Hypothesis: Where Does a Sales Call Fit?

Historically, our approach had been to guide most incoming leads toward self-service conversion within our product. Sales engagement was reserved for leads with high-intent signals and a strong fit with our ideal customer persona (ICP).

But our customers, especially those in the coaching industry, routinely reach out to all prospects who show indications of interest, and they see massive success doing it.

If our customers are using this approach effectively, why wouldn’t we test it ourselves?

At the same time, our marketing team had been refining how we attract and qualify leads.

They challenged us to take a more direct role in understanding our trial users: not just whether they were a great fit, but how they found us, what they were looking for, and what nudges might help them convert. This alignment between sales and marketing gave us the perfect opportunity to experiment.

We wanted to challenge a key assumption: Is there a role for outbound calls, even for leads that don’t fit our ICP criteria? Could proactively reaching out improve overall sales outcomes, even in a motion that typically leans away from high-touch engagement?

Does prioritizing outbound calling at the top of the funnel, regardless of lead profile, create both a brand moment and a meaningful conversion lift? Could it help us uncover hidden opportunities while reinforcing the self-service journey? And if we couldn’t perfectly quantify the impact, would it still be worth it if it led to more engaged, happier prospects and customers?

Framing the Experiment: Prioritizing Speed and Focus

To convince our leadership of the need to devote resources to this idea, we set up a structured 90-day test. The goal was simple: Reach out to new trial signups as quickly as possible and study the impact. Would we see better conversions? Would it change how we qualify leads? Would it uncover new revenue opportunities? What feedback would prospects give us?

To keep the experiment focused, we divided our efforts:

- The core sales team stayed focused on calling ICP leads who already showed strong signals that they were a good fit, but with the goal of reducing their time to first response.

- One rep was tasked with reaching out to non-ICP leads and incentivized to do so. The goal wasn’t just to see if they converted but to identify unexpected patterns: could some of these leads turn into valuable customers despite not matching our traditional criteria?

Our overarching goal was to disqualify people who aren’t a good fit as quickly as possible.

This could mean letting them know our product doesn’t suit their needs, or moving them to a more self-service path to purchase.

Ultimately, quickly disqualifying leads helps our team stay focused on the people who need their help.
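Time to first response is simple to track once you have a signup timestamp and a first-call timestamp for each trial. Here’s a minimal Python sketch of that calculation. The timestamps are made-up sample values, not our data, and the `minutes_to_first_response` helper is a hypothetical name used only for illustration.

```python
from datetime import datetime
from statistics import median

# Hypothetical (signup_time, first_call_time) pairs; NOT real data.
trials = [
    (datetime(2024, 1, 8, 9, 0), datetime(2024, 1, 8, 9, 12)),
    (datetime(2024, 1, 8, 10, 30), datetime(2024, 1, 8, 14, 30)),
    (datetime(2024, 1, 9, 16, 0), datetime(2024, 1, 10, 9, 0)),
]


def minutes_to_first_response(signup: datetime, first_call: datetime) -> float:
    """Minutes elapsed between a trial signup and the first call attempt."""
    return (first_call - signup).total_seconds() / 60


response_times = [minutes_to_first_response(s, c) for s, c in trials]

# The median is less distorted than the mean by one lead who waited overnight.
median_minutes = median(response_times)
print(f"Median time to first response: {median_minutes:.0f} minutes")
```

Tracking the median over time is one way to check whether blocked calling time is actually getting reps to new trials sooner.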

Setting It Up: How Our Team Used Close to Build the Foundation for This Process

Now that we had our structure and goal, it was time to set up the actual process.

And thankfully, we work with one of the most powerful CRMs for outbound calling: Close. 😎

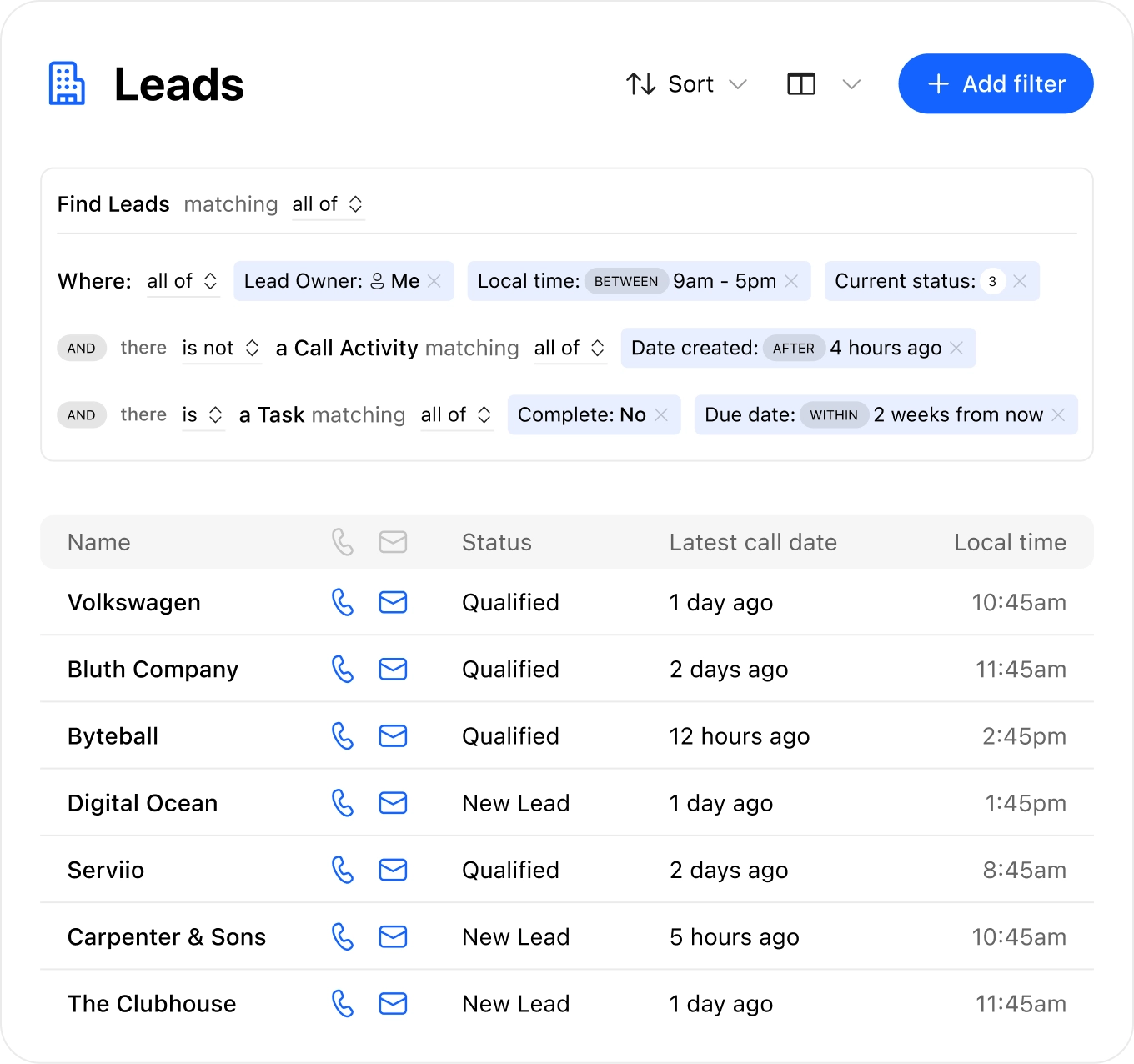

First, we set up very specific calling lists using Smart Views.

Smart Views in Close are filtered lists you can save. New leads enter as they match your filter criteria, and leave when they no longer match.
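If it helps to picture the “enter and leave” behavior, here’s a minimal Python sketch of a saved filter whose membership is recomputed from current lead data every time you open it. This is an illustration only, not Close’s API or implementation; the `Lead` fields, the `SmartView` class, and the sample values are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Lead:
    name: str
    industry: str
    status: str  # hypothetical values: "trial", "customer", "disqualified"


@dataclass
class SmartView:
    """A saved filter; membership is recomputed every time it is read."""
    name: str
    matches: Callable[[Lead], bool]

    def members(self, leads: List[Lead]) -> List[Lead]:
        return [lead for lead in leads if self.matches(lead)]


# Hypothetical calling list: new trials in one industry profile.
coaching_trials = SmartView(
    name="New trials: coaching",
    matches=lambda lead: lead.status == "trial" and lead.industry == "coaching",
)

leads = [
    Lead("Ana", "coaching", "trial"),
    Lead("Ben", "real estate", "trial"),
]
print([lead.name for lead in coaching_trials.members(leads)])  # ['Ana']

# Once Ana converts, she no longer matches and drops out of the list.
leads[0].status = "customer"
print([lead.name for lead in coaching_trials.members(leads)])  # []
```

The point of the sketch is that nobody adds or removes leads by hand: the list is just the filter applied to whatever the data looks like right now.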

We have three calling lists for new trial signups meeting our ideal customer criteria—one for each of our industry profiles. That way, when we talk to these people, we can reference real problems they face in their industry and provide them with real solutions that other customers in that industry have seen with us.

We live and die by Smart Views, and all calling starts from there.

Calling happens from our group number for the sales team, so if someone returns a missed call, they’ll have the best chance of reaching our team.

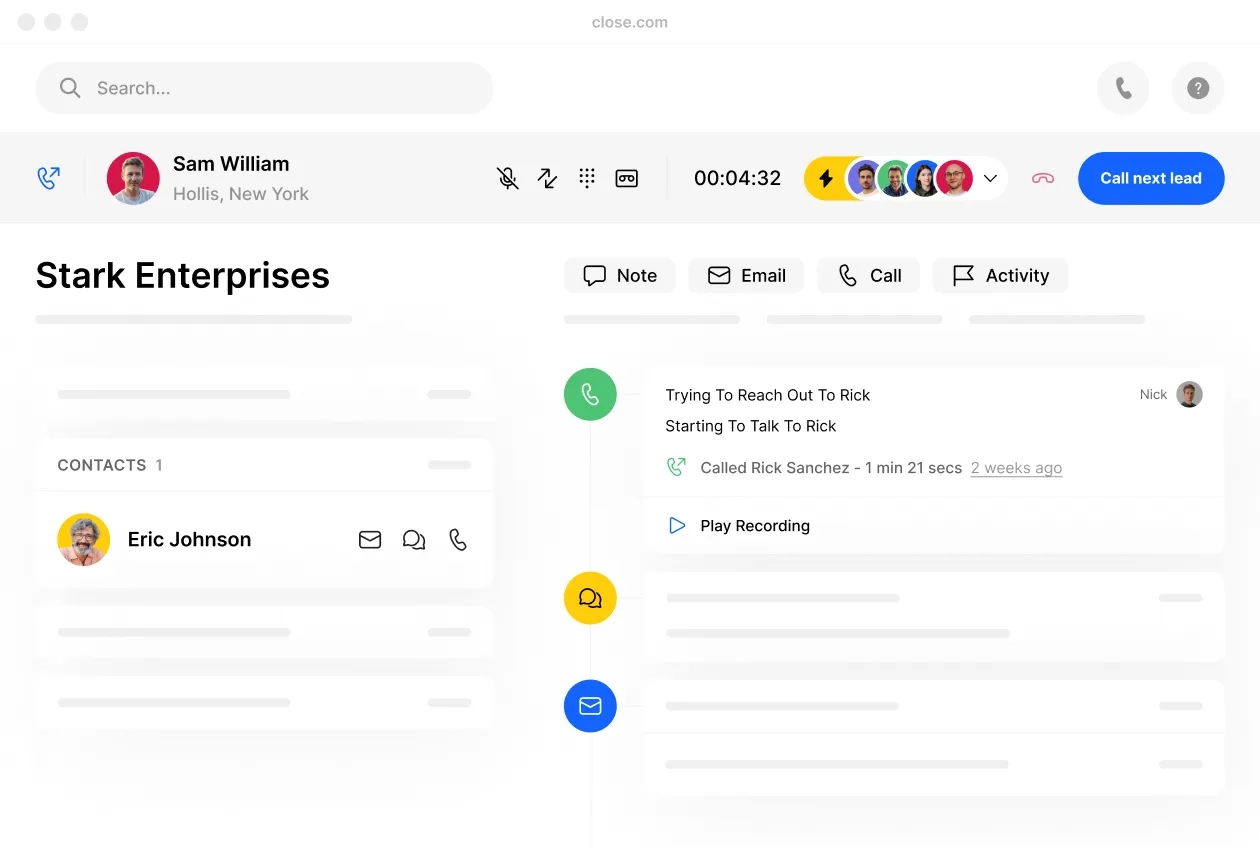

Most of the time, we’ll use the Power Dialer to call through the Smart View list. It dials through the list automatically, showing you each lead page as you call.

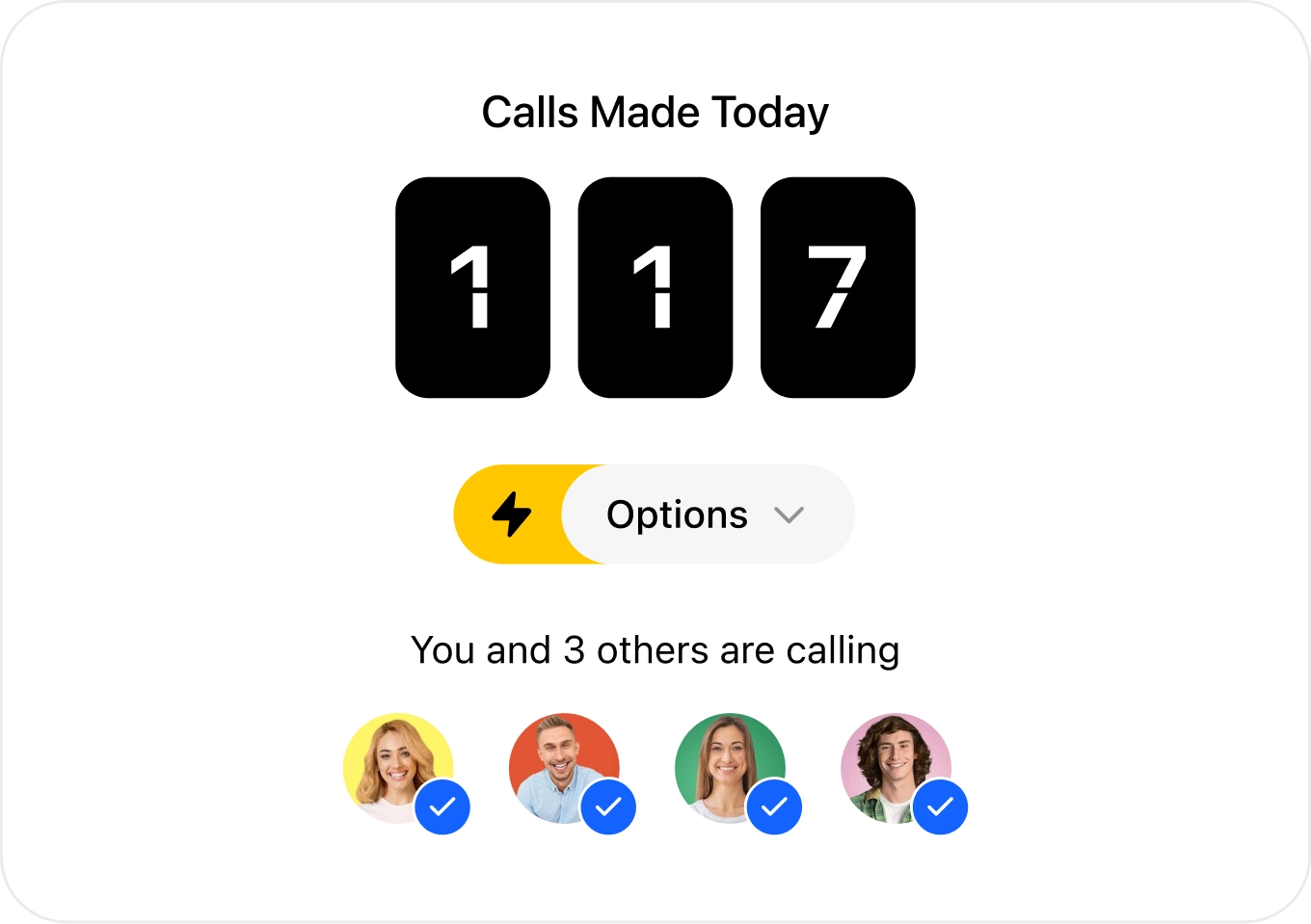

Of course, it’s even better to use the Predictive Dialer—this tool allows our team to dial multiple numbers at once, and we only get connected to someone when a real human answers. It’s literal gold, but you need at least four people calling simultaneously for it to work.

There’s also a psychological aspect to calling together. It’s fun to have a Predictive Dialing session together, knowing you’re all doing the same thing, and then chatting about it in Slack.

Lastly, we have a Custom Activity for disqualification. When a rep is on the phone with someone who isn’t a good fit for Close, they can open that Custom Activity and mark the reason for disqualification.

Of course, whenever we talk to someone who is a good fit for Close, we send them a booking link and get them set up with a discovery call.
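Recording a disqualification reason on every call pays off once you start counting the reasons. Here’s a minimal Python sketch of that tally. The `Disqualification` record and the sample reasons are hypothetical and are not the fields of our actual Custom Activity.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Disqualification:
    lead_name: str
    reason: str


# Hypothetical log entries; NOT real data.
log = [
    Disqualification("Lead A", "needs a feature we don't offer"),
    Disqualification("Lead B", "team too small"),
    Disqualification("Lead C", "needs a feature we don't offer"),
]

# Count how often each reason appears, most common first.
reason_counts = Counter(entry.reason for entry in log)
for reason, count in reason_counts.most_common():
    print(f"{count}  {reason}")
```

A tally like this is the kind of information a sales team can hand back to marketing about who is and isn’t a good lead.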

How This Experiment Led to a Change in How We Do Sales at Close

As we set up the logistics of this experiment, we realized we needed to make some changes in our sales process.

Before we started calling free trials, our sales team had open calendars and would send out lots of booking links. The result: each rep had 10 to 15 meetings every day.

In theory, that sounds great. But when those meetings aren’t with the right people, they can be a massive waste of time.

We were being reactive instead of proactive.

The first immediate change was asking the team to block time for calling. This allowed them to be more proactive, reaching out quickly to new leads and disqualifying those who weren’t a good fit for Close.

With our new process, our team reserves time for people who are truly interested and need support through the sales process.

Once we see someone is interested and qualified, we pitch a discovery meeting. The goal is to provide a proof of concept, to go really in-depth on their problems, and see what they want from a CRM. From there, we can advise them on whether Close is the right fit and how to set it up for their use case.

These changes in our process have allowed us to be much clearer on who we’re selling to and what the next steps are once we qualify or disqualify someone.

Hitting the Phones: What We Said and How We Said It

When your team is calling warm leads, you need a quick-hit script that lets reps get to the point and avoid wasting anyone’s time.

Our calls were more supportive before. Think: We see you’re in a trial. How can we help?

And while that’s nice, it wasn’t helping to move deals forward.

Now, our calls look something like this:

- A quick introduction of who we are

- Ask: How’s the trial going? Anything we can help with?

- And then the big question: What do you need to see from us in order to buy?

This question helps us move deals forward, and it draws a variety of responses. Some people are already sold and are simply waiting until the end of the trial to enter their credit card. Others are trialing Close while also looking at competitors, in which case we can help them get the most out of their trial and see what they really need.

And in general, people respond really positively to these calls.

They may have questions about the product, and we could spend two minutes or 20 minutes helping them get set up in the trial.

But the best reactions come from the people we call closest to when they start their trial.

I’ve even had several people tell me, “You read my mind! How did you know I was looking at your product right now? You caught me at the perfect time.”

Overall, this change helped us focus our calls more and made us rethink how we onboard new trials.

How This Experiment Changed the Way We Onboard New Trials

When I took over the sales team earlier this year, we implemented an onboarding checklist. This was a list of 10-15 actions prospects could take inside the product during the trial.

We knew once people took these actions, they were more likely to stick around after the trial ended. So, our sales team was working hard to ensure people completed this checklist during the two weeks of their trial.

The reasons behind this were solid in theory, but in practice, we didn’t see the results we expected. Instead, we realized that we were overwhelming these people.

For example, if I join a new gym because I want to use the treadmills, but the gym owner spends an hour showing me how to use every other piece of equipment in the gym, I’ll be overwhelmed. Really, I just wanted to see the treadmills.

The same thing was happening with our trials.

Instead, we decided to focus on the one question I mentioned above: What do they need to know about Close to decide if it’s the right fit?

Once we moved away from the onboarding checklist and focused on what the trial user wanted to see in those two weeks, we could give them a proof of concept. Later on, we can trickle in more information about our more advanced features, because we know that successful customers do these things. But, in the short term, what do they really need to see?

We changed our focus from trying to sign them up for a year to just bringing them on for the first month. Because we believe in our product so much, we know that after the first month, they’ll find more and more features to try without the overwhelm of doing it all at once.

Analyzing the Results: What Changed and What Didn’t

We’ve made it through the 90 days of this original experiment. So, what did we learn?

Let’s start with the data (as of the time of writing):

In short, pretty much everything is up.

Besides this, July and August were our best months for new MRR in over 14 months. Of course, a lot of factors go into these net business results. But my hunch is that the machine is operating better overall: marketing is sending us better leads, and we, as a sales team, are giving the marketing team more information about who is and isn’t a great lead.

The sales team’s main concern is still making sure they’re using their time in the best way.

My job is to consistently remind them that the work they’re doing on calling new trials is helping us find the right prospects to talk to.

When talking with one team member, I showed them their own data: the graph showed their meetings had dipped substantially, but the revenue they had closed increased during that same period.

This illustrates the point: they may be spending less time in meetings, but because they’re so focused on the right people, they’re closing more high-value deals.

And that’s exactly what we want.

Here are some quick success stories that prove the point:

- One new trial said, “I wasn’t expecting a phone call. I really like that personal touch.” He immediately booked a demo with the sales team.

- One team member called a prospect and asked how the trial was going. The prospect replied: “I don’t like it.” Over the course of the call, our rep was able to explain more about how the product worked, and eventually, this person converted into a paying customer. Without that initial outreach, she probably would’ve gone silently in another direction.

- I was using our Smart View to call new trials and spoke to someone who had signed up just two minutes before. He told me, “I’m in the platform right now, wondering how to set it up, and then you called!” He loved that I was using our own product to call him.

Calling new trials works—more complex deals are getting the extra love they need, and even more self-service-focused prospects are closing at a higher rate with just a quick call from our team.

Key Takeaways from the Experiment: Here’s What We Learned

Our experiment was a success, and we’re continuing to call new trial signups regardless of persona.

The takeaways?

Speed matters! Not necessarily calling within minutes, but reaching out while the interest and intent are still fresh. Waiting even a day meant trial users often forgot why they signed up or had already moved on to evaluating other tools. By calling early, we positioned ourselves as a helpful guide in their decision-making process, rather than just another option in a crowded market.

We also saw firsthand how calling played a role beyond pure conversion. For many trial users, an early sales touchpoint clarified their path, whether that meant reinforcing the self-service journey or helping them see the value of a higher-tier plan they hadn’t considered. And while not every conversation led to an immediate sale, it strengthened relationships and set the stage for future opportunities.

Even in a self-serve-driven model, there’s room for strategic sales engagement. Proactive outreach doesn’t have to replace product-led conversion; it can enhance it. A well-timed call creates a brand moment, accelerates qualification, and uncovers potential deals that might otherwise be overlooked.

So, is high-volume calling worth it for a PLG sales team?

The answer isn’t one-size-fits-all, but for us, this experiment proved that making outbound a core part of our strategy, especially for trial users who show interest, can drive both immediate and long-term gains.

If you’re ready to experiment with a change in your process, here’s how I would do it:

- Give yourself a timeline, write out what you’re hoping to learn, and consider what needs to happen for you to learn something.

- To get buy-in from your team and from company leadership, make it clear that if you don’t reach your goals or learn what you expected, you’ll go back to the way you were doing things before.

- Experiments are less overwhelming when they start with a clear point of focus and a goal.

Remember: An experiment is only a failure if you don’t learn anything. We learned a lot with our experiment, and we’re continuing to iterate and improve based on what we learned.

Keep making mini improvements to your process, and you’ll learn faster and make more progress. If you try an experiment like this, drop me a line and let me know how it goes!